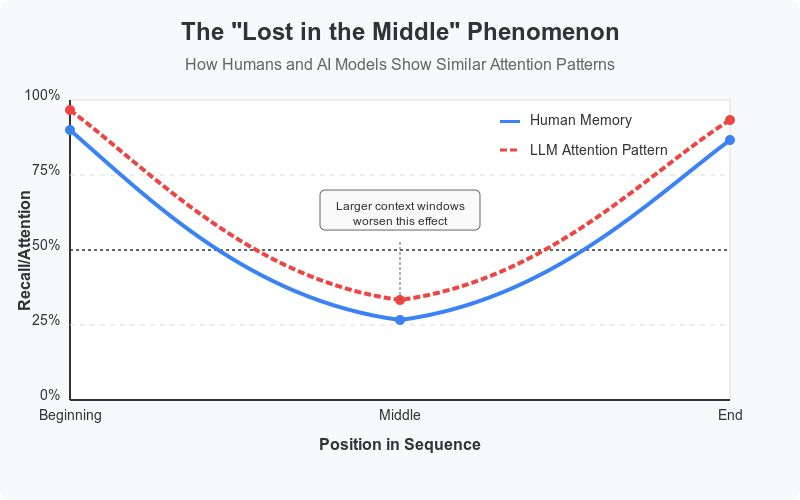

TL;DR: Both humans and AI models struggle to remember information in the middle of long content; they recall the beginning and end much better. Surprisingly, this problem often gets worse as AI models' context windows grow larger.

The Serial Position Effect

Ever notice how you remember the first and last parts of a movie or presentation, but the middle gets blurry? Scientists call this the “Serial Position Effect”—and surprisingly, AI language models exhibit the same behavior.

Understanding Context Windows

Today's large language models (LLMs), such as those behind ChatGPT and Claude, process text through what we call a "context window." Think of it as the AI's short-term memory: the amount of content it can process at once. Context windows are measured in "tokens" (a token is roughly 3/4 of a word in English):

- 2020: Early models handled ~2,000 tokens (about 2 pages of text)

- Early 2025: Advanced models process 100,000+ tokens (~75,000 words)

- Google’s Gemini: Capable of processing 1,000,000 tokens (~750,000 words)

- Meta's Llama 4: Can handle up to 10,000,000 tokens (~7.5 million words!)

Note: Token counts depend on each model's tokenizer, so there is no standard words-per-token ratio and these conversions are only approximate.
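Once you pick a ratio, converting tokens to words is simple arithmetic. Below is a minimal Python sketch. It assumes a fixed ratio of 0.75 words per token, the rule of thumb above. The `WORDS_PER_TOKEN` constant, the `CONTEXT_WINDOWS` table and the `tokens_to_words` helper are illustrative only and are not part of any model's API.

```python
# Rough token-to-word conversion using the rule of thumb from this post:
# one English token is about 3/4 of a word. This ratio is an assumption;
# real counts depend on each model's tokenizer and on the text itself.
WORDS_PER_TOKEN = 0.75

# Context-window sizes mentioned in the list above.
CONTEXT_WINDOWS = {
    "2020 models": 2_000,
    "Early 2025 models": 100_000,
    "Google Gemini": 1_000_000,
    "Meta Llama 4": 10_000_000,
}


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    for name, tokens in CONTEXT_WINDOWS.items():
        print(f"{name:<18} {tokens:>12,} tokens ~ {tokens_to_words(tokens):>11,} words")
```

With this ratio, a 1,000,000-token window holds about 750,000 words and a 10,000,000-token window about 7.5 million. For exact counts, run the text through the specific model's own tokenizer.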

The Paradox of Growth

This explosive growth means AI can now take in the equivalent of dozens of books at once! Yet despite these incredible improvements, these models still have a blind spot: they pay more attention to information at the beginning and end, while the middle gets less focus. The result is a U-shaped pattern, just like in humans.

Why Does This Happen in AI Models?

While humans forget due to cognitive limitations (the natural constraints of how our brains work), AI models struggle with the middle for technical reasons:

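- Attention mechanisms, which let the model weigh how relevant each token is, spread their focus more thinly as texts get longer, because the attention weights across all tokens must add up to a fixed total

- They tend to prioritize the beginning and end positions; proposed explanations include positional encodings that favor recent tokens and the extra attention models give to the first few tokens of the input

- Larger context windows require much more compute and memory (GPU resources): standard self-attention compares every token with every other token, so its cost grows roughly with the square of the context length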

- As context windows expand, the problem often worsens—the “middle” becomes significantly larger while start and end attention remains strong
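A toy calculation makes two of these points concrete: focus spreads thinner, and compute grows quadratically. The Python sketch below is a simplification, not how any particular model works. It assumes a single attention head in which one relevant token scores a fixed amount above every other token. That fixed gap, `score_advantage`, is a hypothetical parameter. The sketch also counts the query-key scores that full self-attention computes. Both function names are illustrative.

```python
import math


def weight_on_key_token(context_len: int, score_advantage: float = 2.0) -> float:
    """Softmax attention weight on one relevant token in a toy single-head setup.

    Assumes (hypothetically) that the relevant token's score is
    `score_advantage` higher than every other token's score, which are all 0.
    Softmax weights always sum to 1, so more tokens means a thinner share.
    """
    relevant = math.exp(score_advantage)
    others = context_len - 1  # each other token contributes exp(0) = 1
    return relevant / (relevant + others)


def attention_scores_computed(context_len: int) -> int:
    """Query-key scores full self-attention computes per head and per layer.

    Every token is compared with every token, so the count is n * n;
    causal masking roughly halves it, but it still grows quadratically.
    """
    return context_len * context_len


if __name__ == "__main__":
    for n in (2_000, 100_000, 1_000_000):
        print(
            f"{n:>9,} tokens: weight on key token = {weight_on_key_token(n):.2e}, "
            f"scores per head/layer = {attention_scores_computed(n):.1e}"
        )
```

Going from 2,000 to 1,000,000 tokens multiplies the score count by 250,000 (500 squared). In this setup, the weight on the single relevant token drops by roughly a factor of 500. Real models use many heads and learned, position-dependent scores. This sketch therefore shows only the direction of the effect, not the U shape itself.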

Strategies to Bypass This Limitation

Here is a practical way to work around this attention pattern:

When asking AI for help: Place your most important instructions at the beginning or end of the context window. That means stating them at the start of the chat, or restating them in your latest message, especially once the chat warns you it is nearing its length limit. Don't let critical details get buried in the middle.
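If you assemble long prompts in code (for example, when pasting several documents into one request), you can apply the same advice mechanically. Below is a minimal Python sketch. The `build_prompt` function and its section labels are hypothetical and do not belong to any AI provider's API. The layout is one reasonable choice, not a guaranteed fix.

```python
def build_prompt(instructions: str, documents: list[str], question: str) -> str:
    """Assemble a long prompt that keeps key instructions out of the middle.

    The instructions appear first and are restated just before the question,
    so they sit at both the beginning and the end of the context.
    """
    parts = [f"INSTRUCTIONS:\n{instructions}"]
    # Bulk reference material goes in the middle, where attention is weakest.
    for i, doc in enumerate(documents, start=1):
        parts.append(f"DOCUMENT {i}:\n{doc}")
    # Repeat the instructions so they also appear near the end.
    parts.append(f"REMINDER OF INSTRUCTIONS:\n{instructions}")
    parts.append(f"QUESTION:\n{question}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_prompt(
        instructions="Answer in one sentence and cite the document number.",
        documents=["(long report text)", "(meeting notes text)"],
        question="What deadline was agreed?",
    )
    print(prompt)
```

Repeating the instructions costs a few extra tokens. In exchange, they sit at both edges of the context instead of being buried behind the documents.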

The Bottom Line

Even the newest AI models with massive context windows still show this pattern—a fascinating reminder that LLMs have human-like limitations. Research teams at leading AI labs continue to explore alternative architectures that might eventually overcome this constraint.

Now that you’ve reached the end of the post, congratulations—you have an excellent attention span! 😁
